Thursday, January 11, 2007

Project Vision:

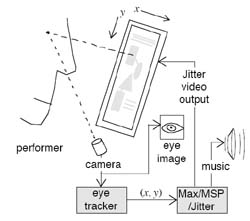

GB is an interactive project that works in real time, using a live feed which is enhanced by a Max/MSP/Jitter patch.

The project is about creating a digital space where users can make their own graphics and rhythms in real time.

GB allows users to have an audio/visual experience that is interactive and responsive.

As the user moves, the face position is tracked and its position parameters are passed to a 3D object that is textured with the live captured view. Changes in these parameters affect the rotation and the scale of the object.
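The mapping from face position to object parameters could be sketched outside Max roughly as follows. This is only an illustration, not the actual patch: it assumes the tracker reports a bounding box as (left, top, right, bottom) in pixels, and the function name, frame size and maximum angle are made up for the example.

```python
def face_to_transform(box, frame_w=320, frame_h=240, max_angle=45.0):
    """Map a face bounding box to (rotate_y, rotate_x, scale).

    Hypothetical helper: the box layout and the parameter ranges are
    assumptions, not values taken from the GB patch.
    """
    left, top, right, bottom = box
    cx = (left + right) / 2.0
    cy = (top + bottom) / 2.0
    # Normalise the face centre to [-1, 1], with 0 at the middle of the frame.
    nx = (cx / frame_w) * 2.0 - 1.0
    ny = (cy / frame_h) * 2.0 - 1.0
    # Horizontal movement turns the object around its vertical axis,
    # vertical movement tilts it.
    rotate_y = nx * max_angle
    rotate_x = ny * max_angle
    # A face closer to the camera gives a wider box, so the relative
    # width is used as the scale factor.
    scale = (right - left) / frame_w
    return rotate_y, rotate_x, scale


if __name__ == "__main__":
    print(face_to_transform((120, 80, 200, 160)))
```

A face centred in the frame leaves the object unrotated, and moving towards the camera makes it larger.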

A change in motion within each split region of the view produces a different note.
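One way to picture this step is frame differencing per region. The sketch below is an assumption about how it could work, not the patch itself: frames are plain lists of greyscale rows, and the threshold and the C major note table are chosen only for the example.

```python
# Assumed note table: C major starting at middle C (MIDI 60).
SCALE = [60, 62, 64, 65, 67, 69, 71, 72]


def region_motion(prev, curr, rows, cols):
    """Mean absolute pixel difference for each cell of a rows x cols grid."""
    height, width = len(curr), len(curr[0])
    cell_h, cell_w = height // rows, width // cols
    motion = []
    for r in range(rows):
        for c in range(cols):
            total = 0
            for y in range(r * cell_h, (r + 1) * cell_h):
                for x in range(c * cell_w, (c + 1) * cell_w):
                    total += abs(curr[y][x] - prev[y][x])
            motion.append(total / (cell_h * cell_w))
    return motion


def notes_for_motion(motion, threshold=10.0):
    """Return one MIDI pitch for every region whose motion exceeds the threshold."""
    return [SCALE[i % len(SCALE)] for i, m in enumerate(motion) if m > threshold]


if __name__ == "__main__":
    prev = [[0] * 4 for _ in range(4)]
    curr = [[0, 0, 100, 100],
            [0, 0, 100, 100],
            [0, 0, 0, 0],
            [0, 0, 0, 0]]
    print(notes_for_motion(region_motion(prev, curr, 2, 2)))
```

Here only the top-right region changes between the two frames, so only the note assigned to that region is returned.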

As the user moves hands and head in front of the camera, a unique composition of sound and vision is formed every time.

How?

Max/MSP/Jitter and cv.jit (computer vision for Jitter) are used in the project.

Live camera input is processed using:

cv.jit.faces ~ face tracking

jit.gl.gridshape, jit.gl.texture, jit.gl.render ~ 3D vision

jit.scissors ~ splitting the view

Objects used:

cv.jit.faces: scans through a greyscale image and tries to find regions that resemble frontal views of human faces.

jit.gl.gridshape: generates one of several simple geometric shapes laid out on a connected grid (sphere, torus, cylinder, opencylinder, cube, opencube, plane, circle). These shapes may be either rendered directly or output as a matrix of values.

jit.gl.texture: creates an OpenGL texture from a matrix or a file.

jit.scissors: cuts a 2-dimensional matrix into a specified number of rows and columns and outputs those regions as smaller matrices.
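The splitting idea itself is easy to show in a few lines. The following is a rough stand-in for what jit.scissors does, written for nested lists; `split_matrix` is a hypothetical name, and the sketch simply drops any leftover pixels when the size does not divide evenly, which may differ from the real object.

```python
def split_matrix(matrix, rows, cols):
    """Cut a 2D list into rows x cols smaller 2D lists, in row-major order."""
    height, width = len(matrix), len(matrix[0])
    cell_h, cell_w = height // rows, width // cols
    return [
        [line[c * cell_w:(c + 1) * cell_w]
         for line in matrix[r * cell_h:(r + 1) * cell_h]]
        for r in range(rows)
        for c in range(cols)
    ]


if __name__ == "__main__":
    # A 4 x 6 test matrix whose values are y * 6 + x.
    matrix = [[y * 6 + x for x in range(6)] for y in range(4)]
    for cell in split_matrix(matrix, 2, 3):
        print(cell)
```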

makenote: supplies note-offs corresponding to note-on messages.
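To make the note-on/note-off pairing concrete, here is a small sketch of the same idea in Python. It is not how makenote is implemented; the class name, the default velocity and duration, and the millisecond clock passed in by the caller are all assumptions for the example.

```python
import heapq


class MakeNote:
    """Pair every note-on with a note-off after a fixed duration."""

    def __init__(self, velocity=100, duration_ms=250):
        self.velocity = velocity
        self.duration_ms = duration_ms
        self._pending = []  # min-heap of (off_time_ms, pitch)

    def note(self, pitch, now_ms):
        """Start a note: remember when it must end and return the note-on."""
        heapq.heappush(self._pending, (now_ms + self.duration_ms, pitch))
        return (pitch, self.velocity)

    def tick(self, now_ms):
        """Return note-offs (velocity 0) for every note that is due by now_ms."""
        offs = []
        while self._pending and self._pending[0][0] <= now_ms:
            _, pitch = heapq.heappop(self._pending)
            offs.append((pitch, 0))
        return offs


if __name__ == "__main__":
    mn = MakeNote(velocity=90, duration_ms=200)
    print(mn.note(60, now_ms=0))
    print(mn.tick(now_ms=100))
    print(mn.tick(now_ms=200))
```

The caller only has to send pitches; the matching note-offs come out of `tick` once the duration has passed, so no note is left hanging.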

Friday, November 17, 2006

fingerplay is basically a sample player. A webcam is used to track and analyse the movement of the actor's hands. The resulting parameters are converted into suitable control parameters for the sampling engine, giving a touchless audio control system in the tradition of the theremin.

fingerplay
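As a rough idea of what such a conversion might look like, the sketch below maps one tracked hand position to a playback rate and a gain, theremin style. Nothing here is taken from fingerplay itself: the function name, the frame size, the one-octave-up/one-octave-down rate range and the choice of axes are all assumptions.

```python
def hand_to_sample_params(x, y, frame_w=320, frame_h=240):
    """Map a hand position in pixels to (playback_rate, gain).

    Hypothetical mapping: horizontal position sweeps the rate from 0.5
    (an octave down) to 2.0 (an octave up); raising the hand makes the
    sample louder.
    """
    # Clamp to the frame and normalise both axes to [0, 1].
    nx = min(max(x / frame_w, 0.0), 1.0)
    ny = min(max(y / frame_h, 0.0), 1.0)
    # An exponential curve gives equal steps in pitch rather than in rate.
    playback_rate = 2.0 ** (nx * 2.0 - 1.0)
    # Image y grows downwards, so the top of the frame is full volume.
    gain = 1.0 - ny
    return playback_rate, gain


if __name__ == "__main__":
    print(hand_to_sample_params(160, 0))
```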

Friday, November 10, 2006

Thursday, November 09, 2006

General Resources, Max Patches and Objects, Max-friendly Hardware, Max Learning Materials, Selected Projects Using Max/MSP/Jitter

http://www.cuttlefish.org/maxmspjitter.html

Cv.jit

Pelletier's toolkit, which is the most feature-rich of the three vision plug-ins (David Rokeby's SoftVNS, Eric Singer's Cyclops, and Jean-Marc Pelletier's CV.Jit), is also the only one that is freeware.

Cv.jit is a collection of Max/MSP/Jitter tools for computer vision applications. The goals of this project are to provide externals and abstractions to assist users in tasks such as image segmentation, shape and gesture recognition, motion tracking, etc. as well as to provide educational tools that outline the basics of computer vision techniques.

http://www.iamas.ac.jp/~jovan02/cv/

Cyclops Realtime Video Analyzer:

Through a simple interface, you can track performers and users with a video camera and analyze greyscale and color information from the live image. You can use the resulting Cyclops data to control MIDI, audio, video and anything else that you can do in Max.

How Cyclops Works

Cyclops receives live video from a QuickTime input source and analyzes each frame of captured video in real-time. It divides the image area into a grid of rectangular zones and analyzes the zones for greyscale, threshold, difference (motion) and color. Cyclops allows you to specify the grid resolution, target zones for analysis and indicate the type of analysis to be performed in each zone. Cyclops outputs messages for each analyzed video frame that can be used to trigger any Max processes or control any patch parameters.

http://www.cycling74.com/products/cyclops
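The zone idea described above can be illustrated with a short sketch. This is not Cyclops's API or message format, only a guess at the general technique: a greyscale frame (a list of rows) is divided into a grid, and each zone reports its mean grey level and whether it passes a threshold. The function name and the result layout are hypothetical.

```python
def analyze_zones(frame, rows, cols, threshold=128):
    """Report the mean grey level and a threshold flag for each grid zone."""
    height, width = len(frame), len(frame[0])
    zone_h, zone_w = height // rows, width // cols
    results = []
    for r in range(rows):
        for c in range(cols):
            pixels = [frame[y][x]
                      for y in range(r * zone_h, (r + 1) * zone_h)
                      for x in range(c * zone_w, (c + 1) * zone_w)]
            mean = sum(pixels) / len(pixels)
            results.append({"zone": (r, c), "grey": mean, "on": mean >= threshold})
    return results


if __name__ == "__main__":
    frame = [[0, 0, 200, 200],
             [0, 0, 200, 200]]
    for zone in analyze_zones(frame, rows=1, cols=2):
        print(zone)
```

Each result could then be used the way the description suggests, for example to trigger a process whenever a zone switches on.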

About computer vision and interactive art:

http://www.flong.com/writings/texts/essay_cvad.html

The various computer vision plug-ins for Max/MSP/Jitter, such as David Rokeby's SoftVNS, Eric Singer's Cyclops, and Jean-Marc Pelletier's CV.Jit, can be used to trigger any Max processes or control any system parameters. Pelletier's toolkit, which is the most feature-rich of the three, is also the only one that is freeware.

CV.Jit provides abstractions to assist users in tasks such as image segmentation, shape and gesture recognition, motion tracking, etc. as well as educational tools that outline the basics of computer vision techniques [Pelletier].

Wednesday, November 01, 2006

Project Description

The GraphBeat project is about creating a digital space where users can make their own graphics and rhythms through live video tracking. The user forms a visual and aural composition.

Objectives

The main objective of this project is to develop a platform where users can create their own unique graphics and music.

Goals

Max/MSP and CV.Jit: tracking live video input

Forming specific rhythms

Creating and programming an interactive platform

Challenges

The project will be built with Max/MSP/Jitter, which I have to learn.

Scope

Objects, each with a unique rhythm, can be added, and the interaction between the user and these objects can form a new composition.

Project Schedule

10 - 15.11 ~ Max/MSP and CV.Jit: tracking live video input

15 - 25.11 ~ organizing visuals, rhythms, programming

25 - 30.11 ~ simple demonstration of the system

01 - 10.12 ~ simple working demonstration of the system

Project PDF and Gantt chart: http://160.75.53.220/ozhararb/GraphBeat.pdf